Artificial intelligence (AI) keeps getting smarter every year thanks to machine learning, and Phi-2 is the new kid on the block. Researchers at Microsoft have used this technology to build a language model that can understand and generate human language remarkably well.

What Makes Phi-2 Special?

Phi-2 is the latest in Microsoft’s Phi series of AI models, following Phi-1 and Phi-1.5. At 2.7 billion parameters, it is roughly twice the size of the 1.3-billion-parameter Phi-1.5 and considerably more capable, and it was trained on far more data (1.4 trillion tokens). Although it is much smaller than AI giants like Google’s LaMDA, Phi-2 is designed to excel at core reasoning, language understanding, and communication. Microsoft trained it on diverse topics such as science, daily life, and common sense so that it behaves intelligently.

Phi-2 even outperforms some models up to 25 times its size on language tasks, and its knowledge and efficiency help it process information and answer questions in a more human-like way.

How Was Phi-2 Created?

Phi-2 learned from two main sources: synthetic data that Microsoft created specifically to teach particular skills, and carefully filtered web data that adds real-world understanding. By mixing these high-quality datasets, Microsoft trained the model to handle complex subjects and realistic situations, and the company continues to refine the technology to make it safer and more capable.

Benefits

A few ways this knowledgeable AI assistant could soon help people include:

• Quick access to expert knowledge on many topics

• Natural conversational abilities

• Creative writing and explaining concepts simply

• Translating languages and decoding complex ideas

• Logical thinking and reasonable decision-making

Potential Dangers

However, as with any influential technology in the AI era, Phi-2 comes with some risks, such as:

• Security flaws could let hackers access private data

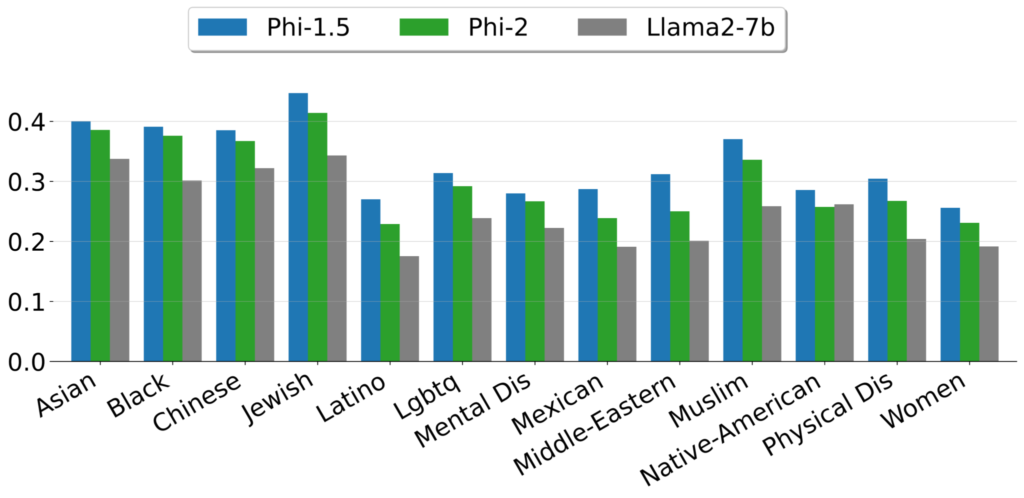

• Biases in training data might cause unfair treatment

• Spreading false information or harmful content

• Job/income loss from automation

• Enabling mass surveillance and loss of privacy

The key is rigorous testing and responsible design before public release. Scientists must study these dangers and minimize them through safety guidelines, while still allowing applications that benefit society.

Microsoft’s Phi-2 was released on December 12, 2023. Compared with existing large language models (LLMs), it has some notable features:

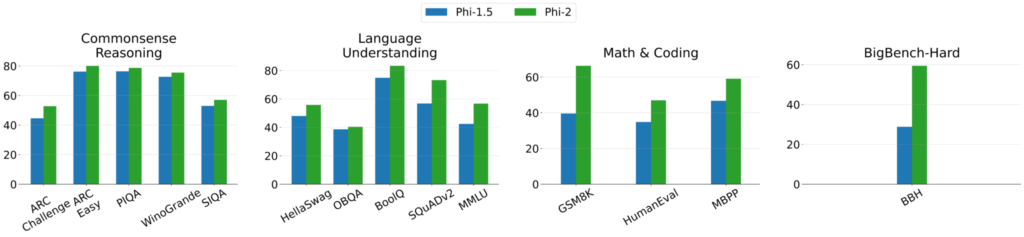

Performance: Despite its smaller size, Phi-2 matches or outperforms models up to 25 times larger on complex benchmarks. It surpasses Mistral and Llama-2 models with 7B and 13B parameters across various aggregated benchmarks. Notably, it outperforms the 70B Llama-2 model on multi-step reasoning tasks such as coding and math.

Training Data Quality: Phi-2 emphasizes the quality of training data. It uses “textbook-quality” data and synthetic datasets specifically created to teach the model common sense reasoning and general knowledge.

Compact Size: With only 2.7 billion parameters, Phi-2 is considerably smaller than many LLMs, making it more practical for research purposes.

Safety and Interpretability: Phi-2 is designed to be an ideal playground for researchers to explore mechanistic interpretability and safety improvements.

Open Source: Phi-2 is freely available to researchers, fostering research and development on language models.

Phi-2 vs GPT-4

Size and Performance: Phi-2 is a 2.7-billion-parameter model, while GPT-4 is significantly larger. Despite its smaller size, Phi-2, as mentioned earlier, matches or outperforms models up to 25 times larger on complex benchmarks.

Training Time and Cost: Microsoft trained Phi-2 on 96 A100 GPUs for 14 days. That is far more cost-effective than GPT-4, whose training reportedly took around 90 to 100 days on tens of thousands of A100 Tensor Core GPUs.

Cost-Effectiveness: Generating a paragraph summary with Phi-2 costs approximately 30 times less than with GPT-4, while preserving a comparable level of accuracy.

Open Source: Phi-2 is freely available to researchers, fostering research and development on language models. GPT-4, on the other hand, is proprietary to OpenAI, and access through a ChatGPT Plus subscription costs 20 dollars a month.
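For a sense of scale, the Phi-2 training budget quoted above can be converted into GPU-hours. This is a minimal sketch assuming all 96 GPUs ran for the full 14 days. GPT-4's training setup has not been officially published, so the second figure uses a hypothetical lower bound of 10,000 A100s for 90 days, chosen only to illustrate the size of the gap; it is not a reported number.

```python
HOURS_PER_DAY = 24


def gpu_hours(num_gpus: int, days: float) -> float:
    """Total accelerator time, assuming every GPU runs for the whole period."""
    return num_gpus * days * HOURS_PER_DAY


# Phi-2 figures as stated in this article: 96 A100 GPUs for 14 days.
phi2_gpu_hours = gpu_hours(96, 14)

# HYPOTHETICAL lower bound for GPT-4, for illustration only (not a reported figure).
gpt4_lower_bound = gpu_hours(10_000, 90)

print(f"Phi-2: {phi2_gpu_hours:,.0f} A100 GPU-hours")  # Phi-2: 32,256 A100 GPU-hours
print(f"Illustrative GPT-4 lower bound: {gpt4_lower_bound:,.0f} GPU-hours")
print(f"Ratio: ~{gpt4_lower_bound / phi2_gpu_hours:.0f}x")
```

Even under this deliberately conservative assumption, the gap is several hundredfold.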

Phi-2 has a wide range of potential use cases, particularly in research and development. Here are some examples:

Common Sense Reasoning and Language Understanding: It has demonstrated state-of-the-art performance among base models with fewer than 13 billion parameters on benchmarks testing common sense, language understanding, and logical reasoning.

Coding and Mathematics: It can solve complex mathematical equations and physics problems. It can also identify mistakes made by a student in a calculation. It has shown impressive performance on Python coding benchmarks.

Chatbots and Virtual Assistants: Given its proficiency in language understanding, it can be used to develop more intelligent and responsive chatbots or virtual assistants.

Research: It is freely available and intended for research purposes. It provides a platform for researchers to explore vital safety challenges, such as reducing toxicity, understanding societal biases, and enhancing controllability.

Education and Tutoring: It can be used in educational settings, such as tutoring systems, where it can provide explanations and solve problems.

Text Generation: It can generate text in various formats, including QA, chat, and code.
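These three formats correspond to simple prompt templates. The sketch below follows the conventions shown on the Phi-2 model card on Hugging Face: an "Instruct: ... Output:" pair for QA, "Name: text" turns for chat, and a function signature plus docstring for code. The helper names are hypothetical, and because Phi-2 is a base model without instruction tuning, it may not always respect these formats.

```python
def qa_prompt(instruction: str) -> str:
    """QA format: the model is expected to continue after 'Output:'."""
    return f"Instruct: {instruction}\nOutput:"


def chat_prompt(turns: list[tuple[str, str]], responder: str) -> str:
    """Chat format: one 'Speaker: text' line per turn, ending with the responder's name."""
    lines = [f"{speaker}: {text}" for speaker, text in turns]
    lines.append(f"{responder}:")
    return "\n".join(lines)


def code_prompt(signature: str, docstring: str) -> str:
    """Code format: a Python signature plus docstring; the model writes the body."""
    return f'{signature}\n    """\n    {docstring}\n    """\n'


print(qa_prompt("Explain why the sky is blue."))
print(chat_prompt([("Alice", "I can't focus while studying. Any tips?")], "Bob"))
print(code_prompt("def print_prime(n):", "Print all primes between 1 and n"))
```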

How to Access Microsoft Phi-2

To use this little dynamo for your projects locally, you’ll need roughly 12.5 GB of memory to run it in float32, or 6.7 GB to run it in float16, in a Python environment.
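Those two figures roughly track the cost of storing the weights: 4 bytes per parameter in float32 and 2 in float16. This sketch, assuming the 2.7-billion parameter count quoted in this article, estimates the weights alone. The figures above are higher, presumably because inference also needs memory for activations, the attention cache, and framework overhead.

```python
# Bytes used to store one parameter in each precision.
BYTES_PER_PARAM = {"float32": 4, "float16": 2}
PHI2_PARAMS = 2.7e9  # parameter count quoted in this article


def weight_memory_gb(num_params: float, dtype: str) -> float:
    """Memory for the model weights alone, in decimal gigabytes (1 GB = 10**9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


for dtype in ("float32", "float16"):
    print(f"{dtype}: ~{weight_memory_gb(PHI2_PARAMS, dtype):.1f} GB for the weights")
# float32: ~10.8 GB for the weights
# float16: ~5.4 GB for the weights
```

Halving the bytes per parameter halves the weight memory, which is why float16 is the usual choice on consumer GPUs.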

Online, you can access it through the following platforms:

Azure AI Studio: Microsoft has made Phi-2 available in the Azure AI Studio model catalog to foster research and development on language models.

Hugging Face: It is also available on this popular platform for open-source models.

Google Colab: You can run it on Google Colab for free.
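As a minimal sketch of the Hugging Face route, the functions below load the `microsoft/phi-2` checkpoint with the `transformers` library and generate a completion. It assumes `torch` and a recent `transformers` release are installed (the model card indicates native Phi-2 support from version 4.37; older versions needed `trust_remote_code=True`). The function names are illustrative, and half precision is used only on a GPU.

```python
MODEL_ID = "microsoft/phi-2"  # Phi-2 repository on the Hugging Face Hub


def load_phi2(device: str = "cuda"):
    """Download (on first use) and load the Phi-2 tokenizer and model."""
    # Imported here so this module can be imported without torch/transformers;
    # they are only needed once you actually load the model.
    import torch
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(MODEL_ID)
    # float16 halves memory on a GPU; many CPU kernels need float32.
    dtype = torch.float16 if device.startswith("cuda") else torch.float32
    model = AutoModelForCausalLM.from_pretrained(MODEL_ID, torch_dtype=dtype)
    model.to(device)
    model.eval()
    return tokenizer, model


def generate(tokenizer, model, prompt: str, max_new_tokens: int = 200) -> str:
    """Generate a continuation with greedy decoding; the returned text includes the prompt."""
    inputs = tokenizer(prompt, return_tensors="pt").to(model.device)
    outputs = model.generate(
        **inputs,
        max_new_tokens=max_new_tokens,
        do_sample=False,
        # Reuse end-of-sequence as the pad token to avoid a warning when none is set.
        pad_token_id=tokenizer.eos_token_id,
    )
    return tokenizer.decode(outputs[0], skip_special_tokens=True)
```

Usage might look like `tokenizer, model = load_phi2("cuda")` followed by `print(generate(tokenizer, model, "Instruct: Explain what a prime number is.\nOutput:"))`. The first call downloads several gigabytes of weights, so expect it to take a while; on Google Colab, select a GPU runtime so the float16 path is used.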

Please note that Phi-2 is intended for research purposes. The model-generated text/code should be treated as a starting point rather than a definitive solution for potential use cases. Users should be cautious when employing these models in their applications.

The Next Era of AI

Today’s AI systems give us only a small taste of the transformative potential in store as machine learning continues to advance rapidly. However, realizing its full promise requires teaching AI literacy and ethics broadly to new generations. That awareness empowers society to maximize the benefits and combat emerging threats as we enter the age of artificial intelligence together!